Audio Features API for songs

The Soundcharts Audio Features API lets developers, distributors, and music data teams extract structured musical descriptors — BPM, key, time signature, energy, valence, danceability, and more — for millions of tracks. Instead of maintaining your own audio analysis pipeline, you can query a single endpoint to power recommendation systems, harmonic mixing tools, mood-based playlists, and music analytics dashboards with consistent, ready-to-use audio features.

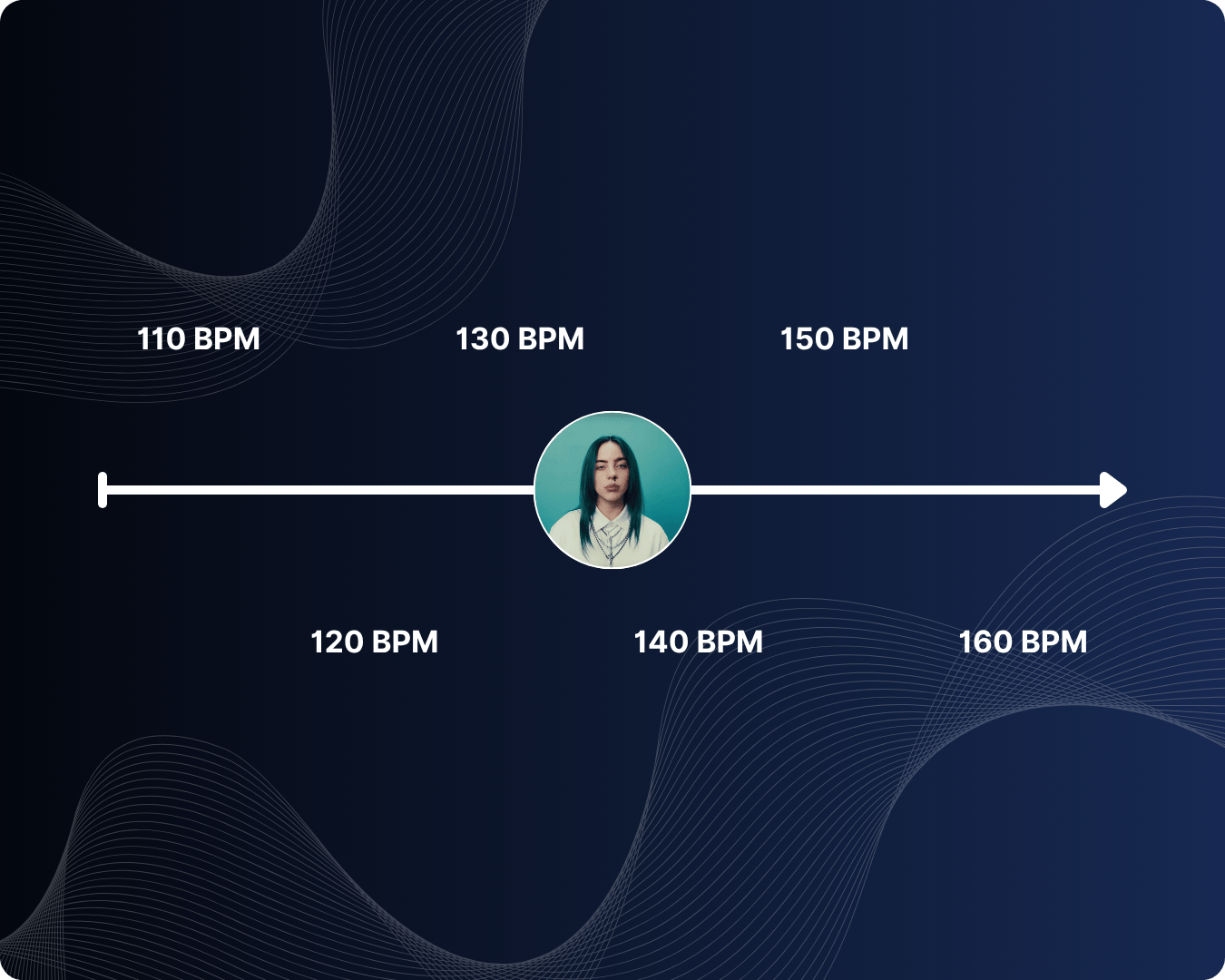

Measure song BPM

Use the Soundcharts BPM API to retrieve precise tempo values (in BPM) for millions of tracks through a single endpoint. Consistent BPM data helps you control transitions, pacing, and energy levels across mixes and playlists.
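Tempo values make it easy to check whether two tracks can be beatmatched. The sketch below computes the smallest tempo change needed to match one track to another, optionally allowing half-time and double-time mixing. It is a minimal sketch that assumes you already have both tempo values as numbers. The 6% default limit is a hypothetical choice, not a Soundcharts setting.

```python
def tempo_gap_percent(bpm_a: float, bpm_b: float, allow_half_double: bool = True) -> float:
    """Smallest tempo change (as % of bpm_a) needed to beatmatch track B to track A."""
    if bpm_a <= 0 or bpm_b <= 0:
        raise ValueError("tempo values must be positive")
    candidates = [bpm_b]
    if allow_half_double:
        # A 70 BPM track can often be mixed with a 140 BPM track at half/double time.
        candidates += [bpm_b * 2, bpm_b / 2]
    return min(abs(c - bpm_a) / bpm_a * 100 for c in candidates)


def can_transition(bpm_a: float, bpm_b: float, max_change_percent: float = 6.0) -> bool:
    """True if B can be tempo-matched to A within max_change_percent (hypothetical default)."""
    return tempo_gap_percent(bpm_a, bpm_b) <= max_change_percent


print(round(tempo_gap_percent(128, 124), 3))  # 3.125
print(can_transition(140, 70))                # True (half time)
print(can_transition(128, 100))               # False
```

Expressing the gap as a percentage, rather than an absolute BPM difference, mirrors how pitch faders work. A 4 BPM jump is a bigger relative change at 80 BPM than at 160 BPM.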

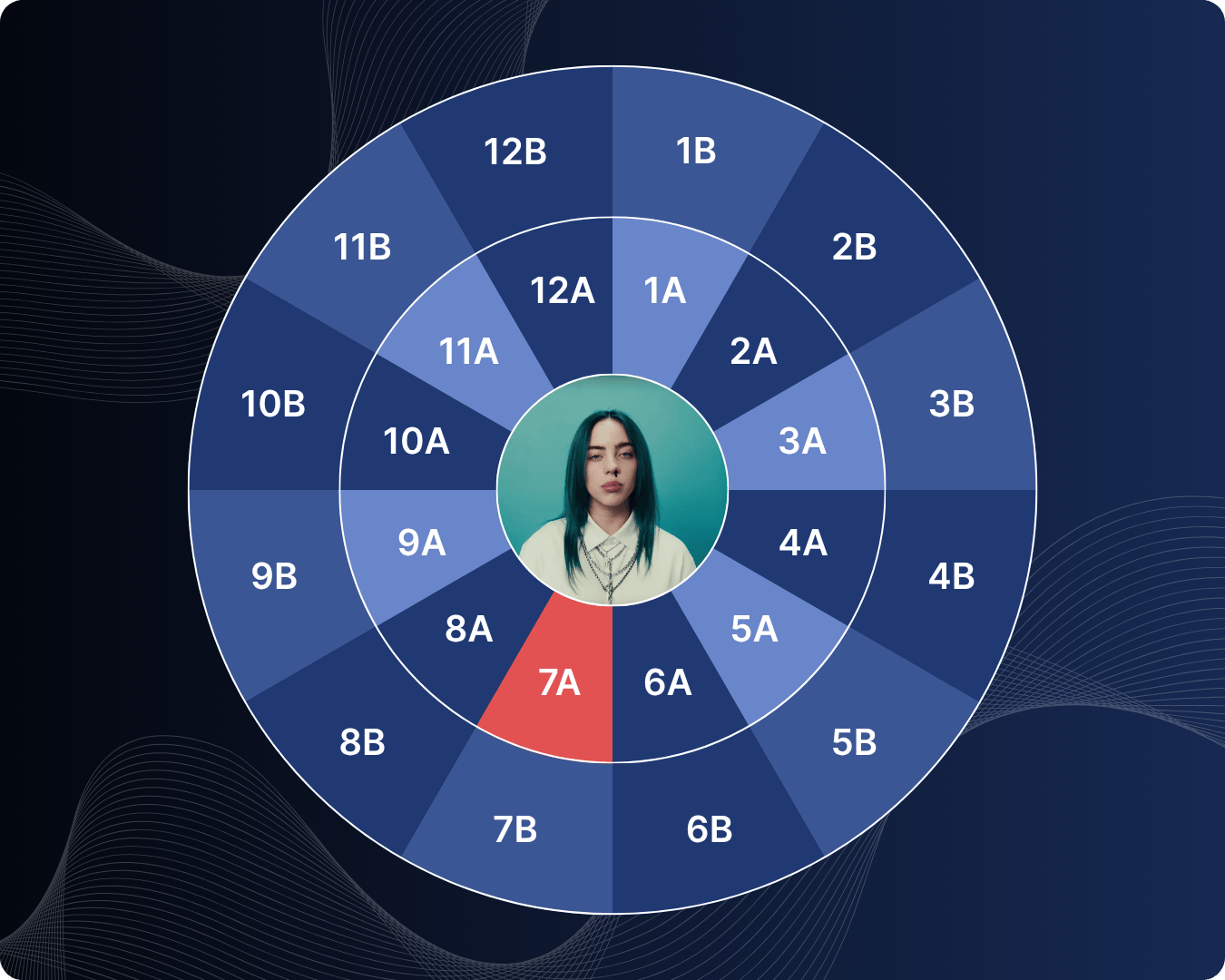

Get song key

Our key API lets you detect the musical key of any song, so your product can understand how tracks relate harmonically. Use key and mode to power chord and scale helpers, songwriting assistants, or smart recommendations that cluster songs by musical compatibility instead of just genre or popularity. The key is exposed as a simple integer (0–11), which you can easily integrate into your existing data models.
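Here is a minimal sketch of turning the integer key into a readable name and checking harmonic compatibility. It assumes standard pitch-class notation (0 = C, 1 = C♯/D♭, …, 11 = B) and that mode is 1 for major and 0 for minor. That convention is common in audio-feature datasets, but confirm it against the Soundcharts documentation. The compatibility rule is the usual "circle of fifths" heuristic used for harmonic mixing. It is not a Soundcharts feature.

```python
PITCH_CLASSES = ["C", "C#/Db", "D", "D#/Eb", "E", "F",
                 "F#/Gb", "G", "G#/Ab", "A", "A#/Bb", "B"]


def key_name(key: int, mode: int) -> str:
    """Readable key name, assuming pitch-class notation and mode 1 = major, 0 = minor."""
    if not 0 <= key <= 11:
        raise ValueError(f"key must be in 0..11, got {key}")
    return f"{PITCH_CLASSES[key]} {'major' if mode == 1 else 'minor'}"


def harmonically_compatible(key_a: int, mode_a: int, key_b: int, mode_b: int) -> bool:
    """Same key, a fifth up/down (same mode), or a relative major/minor pair."""
    if mode_a == mode_b:
        # 7 semitones = a fifth up, 5 semitones = a fifth down (mod 12).
        return (key_b - key_a) % 12 in (0, 5, 7)
    # Relative minor sits 9 semitones above its relative major (e.g. C major -> A minor).
    major_key, minor_key = (key_a, key_b) if mode_a == 1 else (key_b, key_a)
    return (major_key + 9) % 12 == minor_key


print(key_name(9, 0))                          # A minor
print(harmonically_compatible(0, 1, 9, 0))     # True  (C major / A minor)
print(harmonically_compatible(0, 1, 2, 1))     # False (C major / D major)
```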

Analyze song time signature

With the time signature API, you can identify whether a song is in 3/4, 4/4, 5/4, 7/4, or another meter and use that structure in your logic. Time signatures are critical for composition tools, educational apps, sync and production workflows, and any feature that relies on bar-based timing. Soundcharts returns an estimated timeSignature value per track, making it easy to segment audio, align visualizations, and design features that respect the song’s rhythmic grid.
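Tempo and time signature together give you the length of a bar, which is what bar-based segmentation and grid visualizations need. The sketch below makes three assumptions:

- The timeSignature value is the number of beats per bar (for example, 3 for 3/4).
- The tempo is constant.
- The first downbeat time is known. The `first_downbeat_s` parameter is hypothetical and is not a field in the text.

```python
def bar_duration_seconds(tempo_bpm: float, beats_per_bar: int) -> float:
    """Length of one bar, assuming a constant tempo and one counted beat per BPM beat."""
    if tempo_bpm <= 0 or beats_per_bar <= 0:
        raise ValueError("tempo and beats_per_bar must be positive")
    return beats_per_bar * 60.0 / tempo_bpm


def bar_start_times(tempo_bpm: float, beats_per_bar: int, duration_s: float,
                    first_downbeat_s: float = 0.0) -> list[float]:
    """Timestamps (seconds) of each bar start, e.g. for grid lines in a waveform view."""
    bar = bar_duration_seconds(tempo_bpm, beats_per_bar)
    times = []
    i = 0
    # Multiply instead of accumulating to avoid floating-point drift over long tracks.
    while first_downbeat_s + i * bar < duration_s:
        times.append(round(first_downbeat_s + i * bar, 3))
        i += 1
    return times


print(bar_duration_seconds(120, 4))  # 2.0
print(bar_start_times(90, 3, 7.0))   # [0.0, 2.0, 4.0, 6.0]
```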

Discover songs using audio features

Use audio features to go beyond simple text or genre search and actually find songs that match a specific vibe. Filter or rank tracks by BPM, key, time signature, energy, valence, and danceability to surface songs that work together musically.

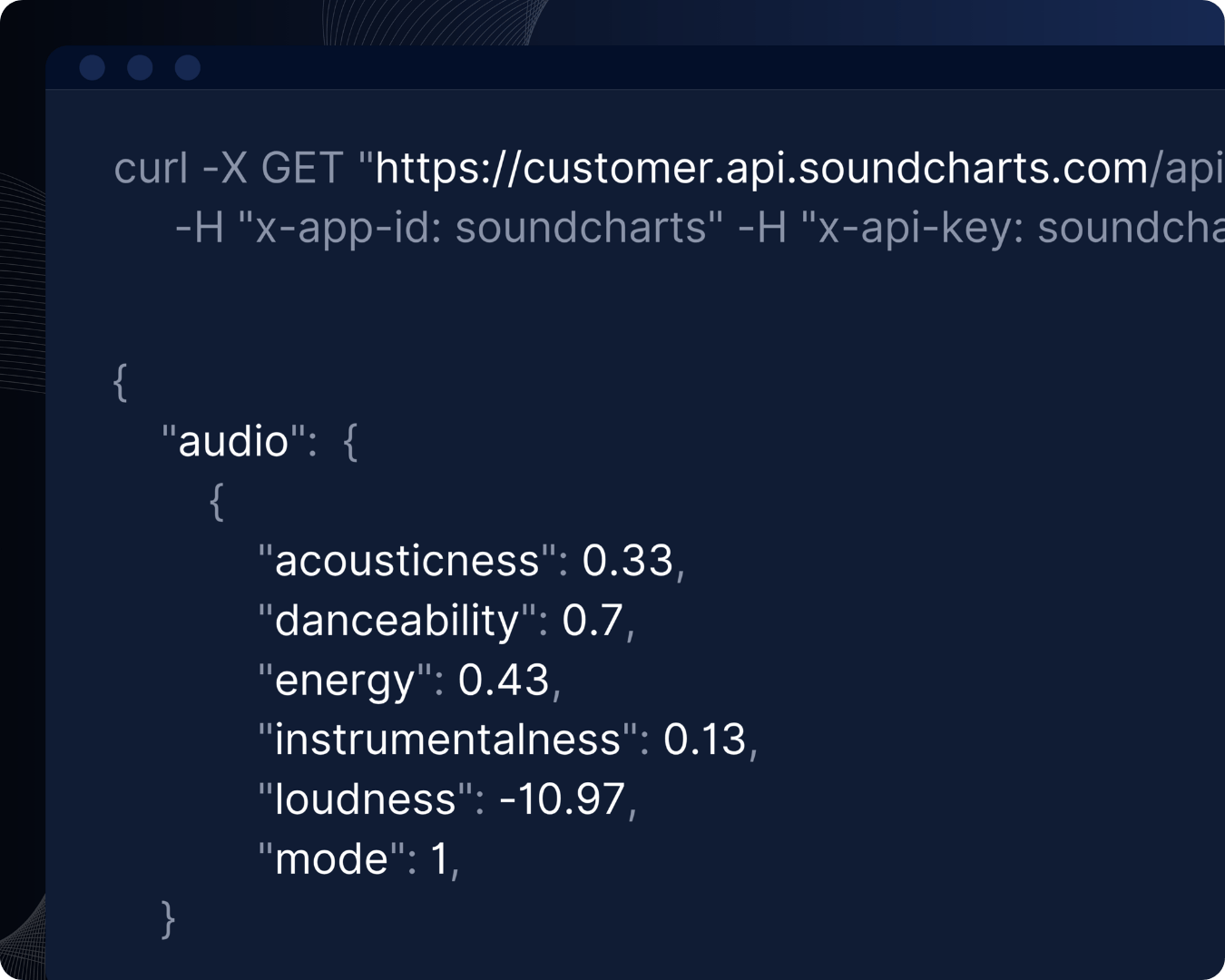

Go beyond BPM with a complete Audio Features API

The Soundcharts Audio Features API provides a full set of high-level descriptors for every track: energy, valence (mood), danceability, acousticness, instrumentalness, speechiness, liveness, loudness, tempo, key, mode, and time signature. These audio features help you move from raw audio to actionable insights — clustering songs by vibe, mood, or intensity instead of relying only on tags or genres.
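A lightweight way to cluster by mood is to split the energy/valence plane into four quadrants, treating energy as a proxy for arousal in a valence/arousal mood model. In this sketch, the 0.5 threshold, the quadrant labels, and the 0 to 1 scale are all hypothetical choices for illustration. A production system might use a learned clustering instead.

```python
from collections import defaultdict


def mood_quadrant(energy: float, valence: float, threshold: float = 0.5) -> str:
    """Map energy (arousal) and valence to one of four coarse, hypothetical mood labels."""
    if energy >= threshold:
        return "upbeat" if valence >= threshold else "intense"
    return "relaxed" if valence >= threshold else "melancholic"


def cluster_by_mood(tracks: list[dict]) -> dict[str, list[str]]:
    """Group track ids by mood quadrant."""
    groups: dict[str, list[str]] = defaultdict(list)
    for t in tracks:
        groups[mood_quadrant(t["energy"], t["valence"])].append(t["id"])
    return dict(groups)


tracks = [
    {"id": "t1", "energy": 0.9, "valence": 0.8},
    {"id": "t2", "energy": 0.85, "valence": 0.2},
    {"id": "t3", "energy": 0.2, "valence": 0.3},
    {"id": "t4", "energy": 0.3, "valence": 0.7},
]
print(cluster_by_mood(tracks))
# {'upbeat': ['t1'], 'intense': ['t2'], 'melancholic': ['t3'], 'relaxed': ['t4']}
```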

Integrate audio features into your existing stack

All audio features are available from the same endpoint you already use for song data, alongside identifiers like UUID and ISRC. That means you can plug the Audio Features API into your catalog, dashboards, or internal tools without redesigning your architecture. Fetch a song once, store BPM, key, time signature, and other features in your own database, and combine them with charts, playlists, or audience data to unlock richer analysis and more intelligent music products.
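The "fetch once, store locally" pattern can be sketched with the standard-library `sqlite3` module. The input field names (`uuid`, `isrc`, `tempo`, `key`, `mode`, `energy`, `valence`, `danceability`) are assumptions about the response shape; only `timeSignature` appears in the text. The table schema is likewise a hypothetical example. The column is named `musical_key` to avoid clashing with SQL's `KEY` keyword.

```python
import sqlite3

COLUMNS = ("uuid", "isrc", "tempo", "musical_key", "mode", "time_signature",
           "energy", "valence", "danceability")


def init_db(conn: sqlite3.Connection) -> None:
    """Create a local table for cached audio features (hypothetical schema)."""
    conn.execute("""
        CREATE TABLE IF NOT EXISTS audio_features (
            uuid TEXT PRIMARY KEY, isrc TEXT, tempo REAL, musical_key INTEGER,
            mode INTEGER, time_signature INTEGER,
            energy REAL, valence REAL, danceability REAL
        )""")


def store_features(conn: sqlite3.Connection, song: dict) -> None:
    """Upsert one song's features; the input field names are assumptions."""
    row = (song["uuid"], song.get("isrc"), song["tempo"], song["key"], song["mode"],
           song["timeSignature"], song["energy"], song["valence"], song["danceability"])
    placeholders = ", ".join("?" * len(COLUMNS))
    conn.execute(
        f"INSERT OR REPLACE INTO audio_features ({', '.join(COLUMNS)}) VALUES ({placeholders})",
        row,
    )
    conn.commit()


def songs_in_tempo_range(conn: sqlite3.Connection, lo: float, hi: float) -> list[str]:
    """Query the local cache instead of calling the API again."""
    cur = conn.execute(
        "SELECT uuid FROM audio_features WHERE tempo BETWEEN ? AND ? ORDER BY tempo", (lo, hi))
    return [r[0] for r in cur.fetchall()]


conn = sqlite3.connect(":memory:")
init_db(conn)
store_features(conn, {"uuid": "song-1", "isrc": None, "tempo": 124.0, "key": 9, "mode": 0,
                      "timeSignature": 4, "energy": 0.8, "valence": 0.6, "danceability": 0.75})
print(songs_in_tempo_range(conn, 120, 130))  # ['song-1']
```

Once the features sit in your own database, joining them with chart, playlist, or audience tables is an ordinary SQL join.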

Access audio features for millions of songs

Use BPM, key, time signature, and danceability data to enrich catalogs, drive recommendations, and build smarter music products via a single API.

Common questions about audio features

What are audio features in music?

Audio features are numerical descriptors that capture how a track “sounds” — for example tempo (BPM), key, time signature, energy, valence (mood), danceability, acousticness, or instrumentalness. They turn raw audio into structured data that can be used for recommendations, playlists, analytics, and machine learning models.

What is BPM in music, and how is it measured?

BPM (beats per minute) measures the tempo of a song — the number of beats that occur in one minute. In the API, tempo is returned as a single numeric value per track, so you can easily filter, sort, or group songs by speed (e.g. 90–110 BPM for chill, 120–140 BPM for workout).
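Because tempo is a single number per track, grouping by speed is a range check. The sketch below uses the example bands from the answer above. The band names, the inclusive bounds, and the `tempo` and `id` field names are illustrative assumptions.

```python
# Example ranges from the answer above; bands are inclusive and may leave gaps.
TEMPO_BANDS = {"chill": (90.0, 110.0), "workout": (120.0, 140.0)}


def bucket_by_tempo(tracks: list[dict], bands: dict = TEMPO_BANDS) -> dict[str, list[str]]:
    """Return track ids grouped by every tempo band they fall into."""
    buckets: dict[str, list[str]] = {name: [] for name in bands}
    for track in tracks:
        for name, (lo, hi) in bands.items():
            if lo <= track["tempo"] <= hi:
                buckets[name].append(track["id"])
    return buckets


tracks = [{"id": "a", "tempo": 98.0}, {"id": "b", "tempo": 128.0}, {"id": "c", "tempo": 115.0}]
print(bucket_by_tempo(tracks))  # {'chill': ['a'], 'workout': ['b']}
```

Track "c" (115 BPM) falls between the two example bands, so it lands in neither. Define contiguous bands if every track must be assigned.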

What are time signatures, and why do they matter for songs?

A time signature describes how beats are grouped in a bar (for example 3/4, 4/4, 5/4, or 7/4). Knowing the time signature is essential for bar-based editing, looping, visualizations, educational tools, and any feature that needs to align actions with the natural rhythmic grid of the song.

What audio features (BPM, key, time signature, energy, valence, etc.) does the API provide?

The Audio Features API exposes tempo (BPM), key and mode, time signature, loudness, energy, valence, danceability, acousticness, instrumentalness, speechiness, and liveness for each track. These values are returned as structured fields so you can store them, query them, and combine them with other music data in your own systems.
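To keep these structured fields consistent in your own systems, you can parse them into a typed record with basic validation. In this sketch, every payload key except `timeSignature` is an assumed name. It also assumes the descriptor fields other than tempo and loudness lie between 0 and 1. Check both assumptions against the actual response before relying on this.

```python
from dataclasses import dataclass, fields

# Hypothetical attribute-to-payload key mapping; only "timeSignature" appears in the text.
PAYLOAD_KEYS = {"time_signature": "timeSignature"}

# Fields assumed (not confirmed) to be on a 0-1 scale.
UNIT_INTERVAL = ("energy", "valence", "danceability", "acousticness",
                 "instrumentalness", "speechiness", "liveness")


@dataclass(frozen=True)
class AudioFeatures:
    tempo: float
    key: int
    mode: int
    time_signature: int
    loudness: float
    energy: float
    valence: float
    danceability: float
    acousticness: float
    instrumentalness: float
    speechiness: float
    liveness: float

    def __post_init__(self) -> None:
        # Reject values outside the assumed ranges early, before they reach storage.
        if not 0 <= self.key <= 11:
            raise ValueError(f"key out of range: {self.key}")
        for name in UNIT_INTERVAL:
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} out of [0, 1]: {value}")

    @classmethod
    def from_payload(cls, payload: dict) -> "AudioFeatures":
        """Build a record from a response dict, translating key names where needed."""
        return cls(**{f.name: payload[PAYLOAD_KEYS.get(f.name, f.name)] for f in fields(cls)})
```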

Do I need to upload audio files, or can I get BPM, key, and time signature for existing songs via IDs (ISRC, Spotify, Apple Music, etc.)?

You don’t need to upload audio files. You can retrieve audio features for existing songs by using standard identifiers such as UUID, ISRC, or platform IDs (e.g. Spotify, Apple Music) to resolve a track, then calling the song metadata endpoint to access BPM, key, time signature, and other features.
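The two-step flow described above (resolve an identifier, then read the song metadata) can be sketched as follows. The host, the URL paths, the `uuid` field, and the `audio` field are placeholders, not the documented Soundcharts API; substitute the real endpoints and authentication headers from the official docs. The HTTP call is injected as a function so the flow can be tested without network access. The ISRC check follows the standard 12-character layout (country, registrant, year, designation).

```python
import json
import re
import urllib.request
from typing import Callable

# Placeholder host and paths: NOT the documented Soundcharts API.
BASE_URL = "https://api.example.invalid"
ISRC_PATTERN = re.compile(r"^[A-Z]{2}[A-Z0-9]{3}\d{7}$")

FetchJson = Callable[[str], dict]


def normalize_isrc(raw: str) -> str:
    """Strip hyphens/whitespace, uppercase, and validate the 12-character ISRC layout."""
    isrc = raw.strip().replace("-", "").upper()
    if not ISRC_PATTERN.match(isrc):
        raise ValueError(f"not a valid ISRC: {raw!r}")
    return isrc


def get_audio_features_by_isrc(raw_isrc: str, fetch_json: FetchJson) -> dict:
    isrc = normalize_isrc(raw_isrc)
    # Step 1: resolve the ISRC to a song UUID (hypothetical path and field).
    uuid = fetch_json(f"{BASE_URL}/song/by-isrc/{isrc}")["uuid"]
    # Step 2: fetch song metadata, which carries the audio features (hypothetical field "audio").
    return fetch_json(f"{BASE_URL}/song/{uuid}")["audio"]


def make_http_fetcher(headers: dict) -> FetchJson:
    """Standard-library HTTP fetcher; the auth headers depend on your account setup."""
    def fetch_json(url: str) -> dict:
        request = urllib.request.Request(url, headers=headers)
        with urllib.request.urlopen(request) as response:
            return json.load(response)
    return fetch_json
```

In production you would pass `make_http_fetcher({...})` with your credentials. In tests, a dictionary lookup stands in for the network.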